Capturing Hands in Action using Discriminative Salient Points and Physics Simulation

Dimitrios Tzionas Luca Ballan Abhilash Srikantha Pablo Aponte Marc Pollefeys Juergen Gall

Abstract

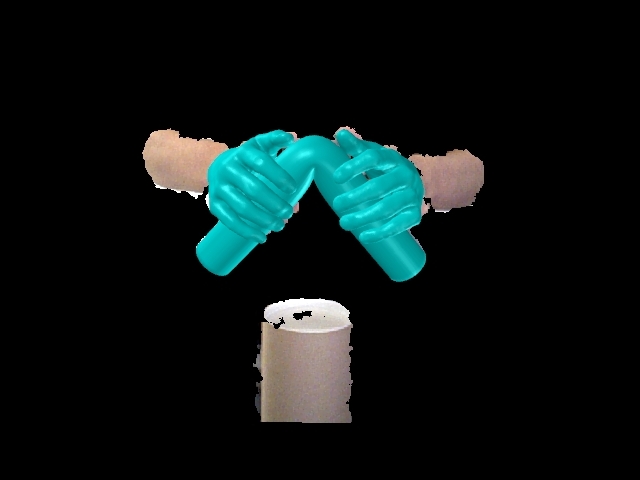

Hand motion capture is a popular research field, recently gaining more attention due to the ubiquity of RGB-D sensors. However, even most recent approaches focus on the case of a single isolated hand. In this work, we focus on hands that interact with other hands or objects and present a framework that successfully captures motion in such interaction scenarios for both rigid and articulated objects. Our framework combines a generative model with discriminatively trained salient points to achieve a low tracking error and with collision detection and physics simulation to achieve physically plausible estimates even in case of occlusions and missing visual data. Since all components are unified in a single objective function which is almost everywhere differentiable, it can be optimized with standard optimization techniques. Our approach works for monocular RGB-D sequences as well as setups with multiple synchronized RGB cameras. For a qualitative and quantitative evaluation, we captured 29 sequences with a large variety of interactions and up to 150 degrees of freedom.
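The idea of unifying several cues in one almost-everywhere differentiable objective can be illustrated with a toy sketch. This is not the energy used in the paper: the 2D "pose", the three terms, the weights, and all function names below are assumptions made for illustration only. A data term and a salient-point term pull a point towards observations, while a hinge-style collision term penalizes penetration into a sphere; the weighted sum is minimized with plain gradient descent on a numerical gradient.

```python
import math

def data_term(theta, observed):
    # Toy stand-in for a model-to-data fitting term: squared distance.
    return sum((t - o) ** 2 for t, o in zip(theta, observed))

def salient_term(theta, detection):
    # Toy stand-in for a salient-point term: squared distance to a detection.
    return sum((t - d) ** 2 for t, d in zip(theta, detection))

def collision_term(theta, center, radius):
    # Squared penetration depth into a sphere; zero outside the sphere.
    # The max() makes the term differentiable almost everywhere.
    depth = max(0.0, radius - math.dist(theta, center))
    return depth ** 2

def objective(theta, observed, detection, center, radius,
              w_salient=1.0, w_collision=10.0):
    # Single objective: weighted sum of all terms (weights are hypothetical).
    return (data_term(theta, observed)
            + w_salient * salient_term(theta, detection)
            + w_collision * collision_term(theta, center, radius))

def numerical_gradient(f, theta, eps=1e-6):
    # Central finite differences, one coordinate at a time.
    grad = []
    for i in range(len(theta)):
        plus, minus = list(theta), list(theta)
        plus[i] += eps
        minus[i] -= eps
        grad.append((f(plus) - f(minus)) / (2 * eps))
    return grad

def minimize(f, theta0, step=0.05, iters=500):
    # Plain gradient descent with a fixed step size.
    theta = list(theta0)
    for _ in range(iters):
        grad = numerical_gradient(f, theta)
        theta = [t - step * g for t, g in zip(theta, grad)]
    return theta

# Observations lie inside a unit sphere, so the collision term pushes
# the estimate outwards, away from the purely data-driven optimum.
def energy(theta):
    return objective(theta, observed=(0.5, 0.0), detection=(0.5, 0.0),
                     center=(0.0, 0.0), radius=1.0)

start = [0.6, 0.1]
result = minimize(energy, start)
```

In this toy setup the estimate settles between the observed position and the sphere surface, which shows how a penalty term trades off against the data terms inside one objective. Changing `w_collision` shifts that balance.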

Publications

Tzionas, D., Ballan, L., Srikantha, A., Aponte, P., Pollefeys, M. and Gall, J.

Capturing Hands in Action using Discriminative Salient Points and Physics Simulation [PDF] [arXiv] [Springer] [BibTex]

International Journal of Computer Vision (IJCV)

Special issue "Human Activity Understanding from 2D and 3D data" (link)

(Submitted on 17.10.14 / Accepted on 16.02.2016)

Tzionas, D., Srikantha, A., Aponte, P. and Gall, J.

Capturing Hand Motion with an RGB-D Sensor, Fusing a Generative Model with Salient Points [PDF] [Web] [BibTex] [Sup1] [Sup2]

German Conference on Pattern Recognition (GCPR'14)

Ballan, L., Taneja, A., Gall, J., Van Gool, L. and Pollefeys, M.

Motion Capture of Hands in Action using Discriminative Salient Points [PDF] [Web] [BibTex] [Suppl.]

European Conference on Computer Vision (ECCV'12)

Videos

Datasets

Monocular RGB-D

(Update) Nov 2019: MANO fits on the data provided below and used in Hasson et al. ICCV'19, for sequences [01, 02, 03, 04, 05, 06, 07, 08, 09, 10, 11, 15, 16, 17, 18, 19, 20]:

MANO fits, Subject's personalized hand template and shape parameters.

Hand-Hand Interaction

The material in this section originates from the GCPR'14 work of Tzionas et al.

Sequences marked with (*) are used just for comparison with the FORTH tracker.

Model-files marked with (**) do not contain sequence-specific files (.SKEL and .MOTION)

Hand-Object Interaction

| Item | Downloads |
|---|---|
| Moving a Ball with one hand | [All_Files] [Files_noPCL] [Preview] [Models/Motion] [Videos_In/Results] |
| Moving a Ball with two hands | [All_Files] [Files_noPCL] [Preview] [Models/Motion] [Videos_In/Results] |
| Bending a Pipe | [All_Files] [Files_noPCL] [Preview] [Models/Motion] [Videos_In/Results] |
| Bending a Rope | [All_Files] [Files_noPCL] [Preview] [Models/Motion] [Videos_In/Results] |
| Moving a Ball with one hand and occlusion of a manipulating finger | [All_Files] [Files_noPCL] [Preview] [Models/Motion] [Videos_In/Results] |
| Moving a Cube with one hand | [All_Files] [Files_noPCL] [Preview] [Models/Motion] [Videos_In/Results] |
| Moving a Cube with one hand and occlusion of a manipulating finger | [All_Files] [Files_noPCL] [Preview] [Models/Motion] [Videos_In/Results] |
| Failure Case - Seq NOT in dataset: | some files below are common (duplicate links are inactive) |
| Moving a Ball with a hand platform (without fingertip detector) | [All_Files] [Files_noPCL] [Preview] [Models/Motion] [Videos_In/Results] |
| Moving a Ball with a hand platform (with a fingertip detector) | [All_Files] [Files_noPCL] [Preview] [Models/Motion] [Videos_In/Results] |
| Camera Calibration | [Calibration] |
| Models (**) | [All] [Hand_Right] [Hand_Left] [Ball] [Cube] [Pipe] [Rope] |
| Ground-Truth Joints | [Contained above in *All_Files* or *Files_noPCL*] |
| Sequences Info | [Dataset_ReadMe] [Dataset_SequencesINFO] |
| Fingertip Detector | [Sequences_and_Ground_Truth] [Preview_Sequences_and_Ground_Truth] |
| Supplementary Videos | [ResultsOverview_AllSequences] [HandObjectInteraction_NewIJCVseq] [CollisionDetection] [DetectionsAssignmentsExample] [FingertipDetectorAnnotations] [FailueCase_DetectorOffOn] [FailueCase_DetectorOffOn_Slow] |
| Software to visualize results in 3D | [Files] or [Github] |
| Software to visualize groundtruth | [Files] or [Github] |

The material in this section appears for the first time in this work.

Model-files marked with (**) do not contain sequence-specific files (.SKEL and .MOTION)

Multicamera RGB

Original datasets used in the paper (compressed using LJPG)

The material in this section originates from the ECCV'12 work of Ballan et al.

The Rope and Paper sequences, however, are first presented in this work.

Related Projects

In chronological order:

3D Object Reconstruction from Hand-Object Interactions, ICCV 2015 [Web]

Capturing Hand Motion with an RGB-D Sensor, Fusing a Generative Model with Salient Points, GCPR 2014 [Web]

A Comparison of Directional Distances for Hand Pose Estimation, GCPR 2013 [Web]

Citation

If you find the material in this website useful for your academic work, please cite this work.

Contact

If you have questions concerning the data, please contact:

| Dimitrios Tzionas | for monocular RGB-D data/experiments |
| Luca Ballan | for multicamera RGB data/experiments |

If you have general questions or comments concerning the paper or the website, please contact Dimitrios Tzionas.