Capturing Hand Motion with an RGB-D Sensor, Fusing a Generative Model with Salient Points

Dimitrios Tzionas, Abhilash Srikantha, Pablo Aponte and Juergen Gall

An extended IJCV version of this project is available here (accepted on 10.02.2016).

Abstract

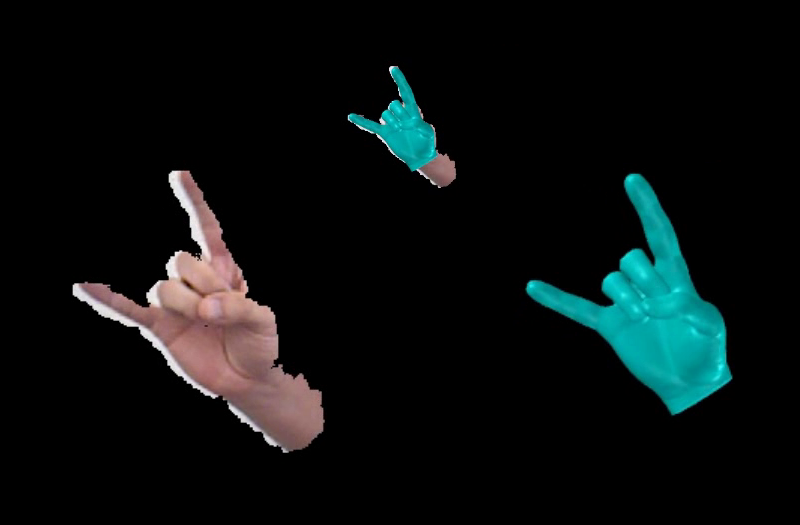

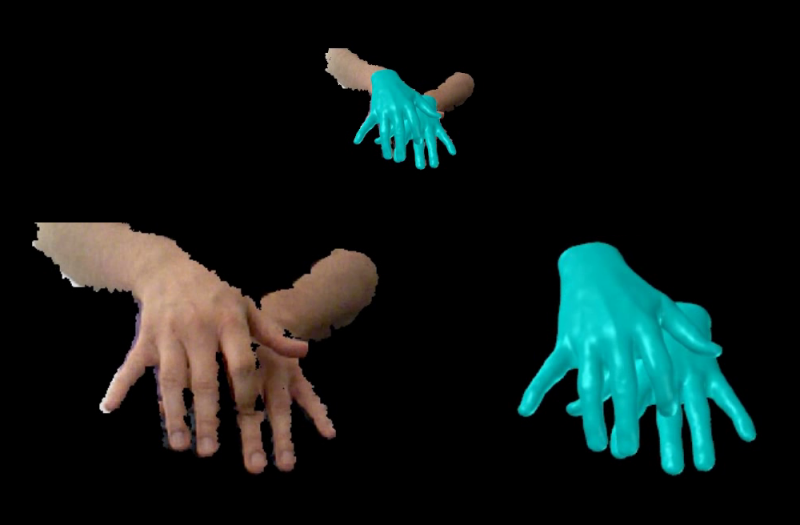

Hand motion capture has been an active research topic in recent years, following the success of full-body pose tracking. Despite the similarities, hand tracking proves to be more challenging, as it is characterized by a higher dimensionality, severe occlusions and self-similarity between fingers. For this reason, most approaches rely on strong assumptions, such as hands in isolation or expensive multi-camera systems, that limit their practical use. In this work, we propose a framework for hand tracking that can capture the motion of two interacting hands using only a single, inexpensive RGB-D camera. Our approach combines a generative model with collision detection and discriminatively learned salient points. We quantitatively evaluate our approach on 14 new sequences with challenging interactions.

Publications

Tzionas, D., Srikantha, A., Aponte, P. and Gall, J.

Capturing Hand Motion with an RGB-D Sensor, Fusing a Generative Model with Salient Points (PDF, BibTeX)

German Conference on Pattern Recognition (GCPR'14)

Supplementary Material: Capturing Hand Motion with an RGB-D Sensor, Fusing a Generative Model with Salient Points (PDF, Files)

Video

YouTube (up to 1080p resolution)

1280x960 px (44.3 MB)

Data

Sequences marked with (*) are used only for comparison with the FORTH tracker.

Model files marked with (**) do not contain the sequence-specific files (.SKEL and .MOTION).

Presentation

PPT (124.0 MB): presentation slides

PPT extraSlides (161.0 MB): contains back-up slides for the Q&A section

Related projects

A Comparison of Directional Distances for Hand Pose Estimation, GCPR 2013. Link

Citation

If you find the material on this website useful for your academic work, please cite this work.

Contact

If you have questions concerning the data, please contact me.