Local Context Priors for Object Proposal Generation

Marko Ristin, Juergen Gall, and Luc Van Gool

Abstract

State-of-the-art methods for object detection are mostly based on an expensive exhaustive search over the image at different scales. To reduce computation time, one can instead perform a selective search that yields a small subset of relevant object hypotheses for the detector to evaluate. To this end, we employ regression to predict likely object scales and locations from the local context of an image. We further show how a priori information, when available, can be integrated to improve the prediction. Experimental results on three datasets, including the Caltech pedestrian and PASCAL VOC datasets, show that our method matches the detection performance of an exhaustive search at a much lower computational cost. Since we model the prior distribution over the proposals locally, it generalizes well and can be applied successfully across datasets.
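
The sketch below is a minimal illustration of the general "sample proposals from local predictions" loop described above, not the authors' implementation. It assumes that exploration patches are drawn uniformly at random and that each patch yields a Gaussian over window offset and log-scale. The learned regressor is replaced by `predict_offsets`, a hypothetical placeholder, and the fixed height-to-width ratio `aspect` (a pedestrian-like 2.0) is likewise an assumption.

```python
import math
import random
from dataclasses import dataclass


@dataclass
class Window:
    x: float  # window centre, x (pixels)
    y: float  # window centre, y (pixels)
    w: float  # width (pixels)
    h: float  # height (pixels)


def predict_offsets(patch_x, patch_y, patch_size):
    """HYPOTHETICAL stand-in for the learned regressor.

    Returns (mean, std) of (dx, dy, log_width) relative to the patch centre.
    This placeholder ignores image content and just assumes objects lie near
    the patch at roughly the patch scale; a real model would use local context.
    """
    mean = (0.0, 0.0, math.log(patch_size))
    std = (0.5 * patch_size, 0.5 * patch_size, 0.3)
    return mean, std


def propose_windows(img_w, img_h, n_patches=50, per_patch=4,
                    patch_size=64, aspect=2.0, rng=None):
    """Sample candidate windows from per-patch predicted distributions."""
    rng = rng or random.Random(0)
    windows = []
    for _ in range(n_patches):
        # 1. Exploration patch drawn uniformly at random over the image.
        px = rng.uniform(0, img_w)
        py = rng.uniform(0, img_h)
        (mx, my, ms), (sx, sy, ss) = predict_offsets(px, py, patch_size)
        for _ in range(per_patch):
            # 2. Sample location and (log-)scale from the predicted Gaussian.
            cx = min(max(px + rng.gauss(mx, sx), 0.0), img_w)  # clip to image
            cy = min(max(py + rng.gauss(my, sy), 0.0), img_h)
            w = math.exp(rng.gauss(ms, ss))
            windows.append(Window(cx, cy, w, aspect * w))
    return windows


proposals = propose_windows(640, 480)
print(len(proposals))  # 200 (50 patches x 4 windows each)
```

Sampling the scale in log space keeps widths positive and treats a doubling or halving of size symmetrically. The detector would then be evaluated only on these sampled windows rather than on a dense sliding-window grid.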

Images/Videos

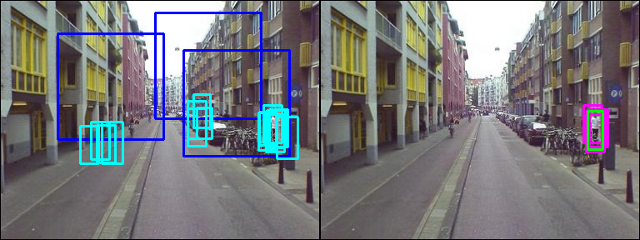

Example illustrating the local prior method. a) Exploration patches (blue) are generated uniformly at random, and suggested windows (cyan) are sampled from the estimated distributions. b) Hits (ground truth in green, hits in purple).

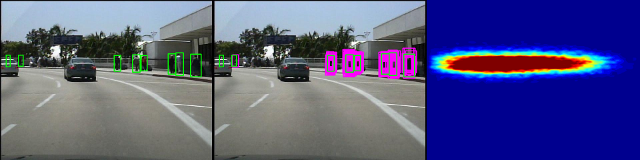

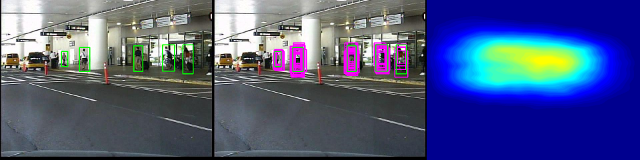

Ground-truth bounding boxes (green), suggested windows hitting the ground truth (purple), and the estimated density over location and scale projected onto the image.
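
The captions mark suggested windows that "hit" the ground truth. The page does not state the hit criterion. The sketch below assumes the common PASCAL-style rule, in which a window hits a ground-truth box if their intersection over union (IoU) is at least 0.5. Boxes are assumed to be `(x1, y1, x2, y2)` tuples, and `iou` and `count_hits` are illustrative helper names.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def count_hits(proposals, ground_truth, threshold=0.5):
    """Number of ground-truth boxes covered by at least one proposal
    (assumed criterion: IoU >= threshold)."""
    return sum(
        any(iou(p, g) >= threshold for p in proposals) for g in ground_truth
    )


gt = [(100, 100, 150, 200)]
props = [(105, 105, 155, 205), (300, 300, 350, 400)]
print(count_hits(props, gt))  # 1 (first proposal has IoU ~0.75)
```

Dividing the hit count by the number of ground-truth boxes gives the recall of a proposal set. This is one way to compare a sampled subset of windows against an exhaustive search.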

Publications

Ristin M., Gall J., and Van Gool L., Local Context Priors for Object Proposal Generation, Asian Conference on Computer Vision (ACCV'12), to appear.