Drift-free Tracking of Rigid and Articulated Objects

Juergen Gall, Bodo Rosenhahn, and Hans-Peter Seidel

Abstract

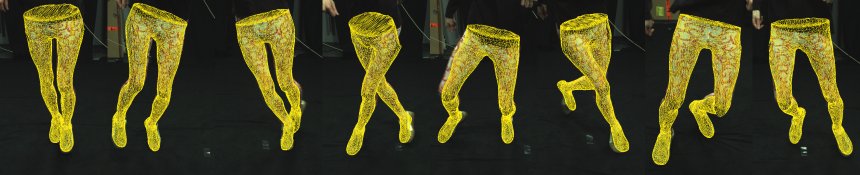

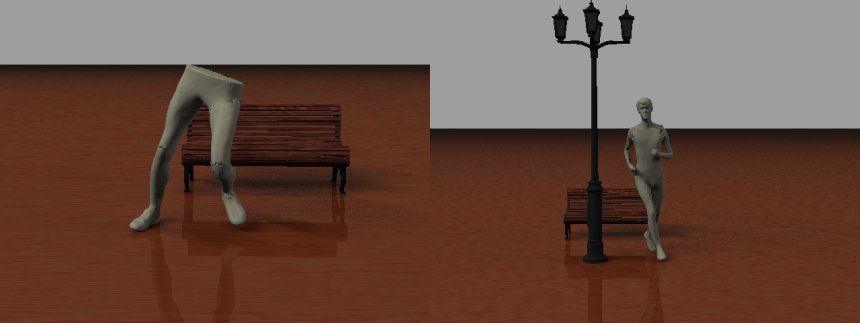

Model-based 3D trackers estimate the position, rotation, and joint angles of a given model from video data of one or multiple cameras. They often rely on image features that are tracked over time, but the accumulation of small errors results in a drift away from the target object. In this work, we address the drift problem for the challenging task of human motion capture and tracking in the presence of multiple moving objects, where the error accumulation becomes even more problematic due to occlusions. To this end, we propose an analysis-by-synthesis framework for articulated models. It combines the complementary concepts of patch-based and region-based matching to track both structured and homogeneous body parts. The performance of our method is demonstrated for rigid bodies, body parts, and full human bodies in sequences that contain fast movements, self-occlusions, multiple moving objects, and clutter. We also provide a quantitative error analysis and a comparison with other model-based approaches.
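To make the drift problem concrete, here is a toy one-dimensional sketch. It is not the method from the paper. It only contrasts two ways of using a measurement that carries a small error. The hypothetical function `true_pose` stands in for a joint angle, and `bias` is an assumed constant per-frame measurement error. Frame-to-frame tracking adds each measured motion to the previous estimate, so every error is carried forward. Matching against a fixed reference, in the spirit of analysis-by-synthesis against a model, measures the absolute pose in every frame, so the error does not compound.

```python
import math


def true_pose(t: int) -> float:
    # Hypothetical ground-truth 1-D "pose" (e.g. a joint angle in radians).
    return 0.5 * math.sin(0.05 * t)


def track(num_frames: int, bias: float):
    """Compare frame-to-frame tracking with tracking against a fixed reference.

    Assumption for illustration: every measurement carries the same small error `bias`.
    """
    chained = [true_pose(0)]   # both trackers start from a correct initialization
    anchored = [true_pose(0)]
    for t in range(1, num_frames):
        # Frame-to-frame: measure the motion between frames t-1 and t and add it
        # to the previous estimate, so each frame's error is accumulated.
        delta = true_pose(t) - true_pose(t - 1) + bias
        chained.append(chained[-1] + delta)
        # Fixed reference: measure the absolute pose directly against the model,
        # so the error stays at the size of a single measurement error.
        anchored.append(true_pose(t) + bias)
    return chained, anchored


if __name__ == "__main__":
    chained, anchored = track(100, 0.01)
    print("frame-to-frame error at last frame:", chained[-1] - true_pose(99))
    print("fixed-reference error at last frame:", anchored[-1] - true_pose(99))
```

With a constant bias, the frame-to-frame error grows linearly with the number of frames (about 0.99 after 100 frames here), while the fixed-reference error stays at 0.01. With zero-mean random errors instead of a bias, the accumulated error behaves like a random walk and grows roughly with the square root of the number of frames, so it still drifts.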

Images/Videos

Video (AVI, ~30 MB)

Publications

Gall J., Rosenhahn B., and Seidel H.-P., Drift-free Tracking of Rigid and Articulated Objects (PDF), IEEE Conference on Computer Vision and Pattern Recognition (CVPR'08), 1-8, 2008. © IEEE

Gehrig S., Badino H., and Gall J., Accurate and Model-Free Pose Estimation of Crash Test Dummies, Human Motion: Understanding, Modeling, Capture and Animation, Klette R., Metaxas D., and Rosenhahn B. (Eds.), Computational Imaging and Vision, Springer, Vol 36, 453-473, 2008. © Springer-Verlag