Learning for Multi-View 3D Tracking in the Context of Particle Filters

Juergen Gall, Bodo Rosenhahn, Thomas Brox, and Hans-Peter Seidel

Abstract

In this paper, we present an approach for using prior knowledge in the particle filter framework for 3D tracking, i.e., estimating the state parameters, such as joint angles, of a 3D object. The probability of the object's states, including correlations between the state parameters, is learned a priori from training samples. We introduce a framework that integrates this knowledge into the family of particle filters and, in particular, into the annealed particle filter scheme. Furthermore, we show that the annealed particle filter also works with a variational model for level-set-based image segmentation that does not rely on background subtraction and hence does not depend on a static background. In our experiments, we use a four-camera set-up to track the lower part of a human body with a kinematic model with 18 degrees of freedom. We demonstrate the increased accuracy due to the prior knowledge and the robustness of our approach to image distortions. Finally, we quantitatively compare the results of our multi-view tracking system with the output of an industrial marker-based tracking system.
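To illustrate the general idea of combining a learned prior with annealing, the following is a minimal toy sketch, not the implementation from the paper. It makes several assumptions: a single Gaussian fitted to training samples stands in for the learned state distribution (the paper's density model may differ), the observation energy is a hypothetical quadratic function rather than an image-based one, and all names (`fit_gaussian_prior`, `prior_energy`, `annealed_step`) and parameter values are chosen for illustration only.

```python
import numpy as np

def fit_gaussian_prior(train):
    """Fit mean and inverse covariance to training states (rows = samples).
    Assumption: a single Gaussian stands in for the learned prior."""
    mean = train.mean(axis=0)
    cov = np.cov(train, rowvar=False) + 1e-6 * np.eye(train.shape[1])
    return mean, np.linalg.inv(cov)

def prior_energy(x, mean, cov_inv):
    """Half the squared Mahalanobis distance of each particle to the prior mean.
    The full covariance captures correlations between state parameters."""
    d = x - mean
    return 0.5 * np.einsum("ni,ij,nj->n", d, cov_inv, d)

def annealed_step(particles, obs_energy, mean, cov_inv, betas, sigma, rng):
    """One time step: annealing layers with increasing beta and shrinking diffusion."""
    n = len(particles)
    estimate = particles.mean(axis=0)
    for k, beta in enumerate(betas):
        # Total energy = observation term + learned prior term.
        energy = obs_energy(particles) + prior_energy(particles, mean, cov_inv)
        w = np.exp(-beta * (energy - energy.min()))  # shift for numerical stability
        w /= w.sum()
        estimate = (w[:, None] * particles).sum(axis=0)  # weighted mean of this layer
        # Resample according to the weights, then diffuse with reduced noise.
        idx = rng.choice(n, size=n, p=w)
        noise = sigma * 0.5 ** (k + 1)
        particles = particles[idx] + rng.normal(0.0, noise, particles.shape)
    return estimate, particles

# Toy 2D example (hypothetical data, not from the paper).
rng = np.random.default_rng(0)
target = np.array([1.0, 2.0])
train = rng.multivariate_normal(target, [[0.3, 0.2], [0.2, 0.3]], size=200)
mean, cov_inv = fit_gaussian_prior(train)

def obs_energy(x):
    # Hypothetical quadratic observation energy centred on the true state.
    return np.sum((x - target) ** 2, axis=1) / (2 * 0.2 ** 2)

particles = rng.normal(0.0, 1.0, size=(500, 2))
estimate, particles = annealed_step(
    particles, obs_energy, mean, cov_inv,
    betas=[0.1, 0.3, 1.0, 3.0], sigma=0.5, rng=rng,
)
```

In this sketch the prior simply adds an energy term that penalizes states far from the training distribution, so particles in implausible configurations receive low weight even when the observation term alone would not rule them out.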

Images/Video

Results for a walking sequence captured by four cameras. (AVI)

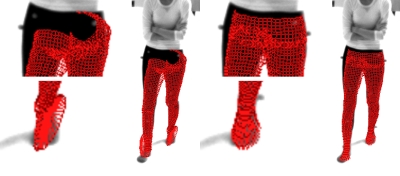

Tracking without prior (left) vs. prior with weighted distance (right). (AVI)

Occlusions by 30 random rectangles. (AVI)

Results for a sequence with scissor jumps. (AVI) |

Publications

Gall J., Rosenhahn B., Brox T., and Seidel H.-P., Learning for Multi-View 3D Tracking in the Context of Particle Filters (PDF), International Symposium on Visual Computing (ISVC'06), Springer, LNCS 4292, pp. 59-69, 2006. © Springer-Verlag