Abstract

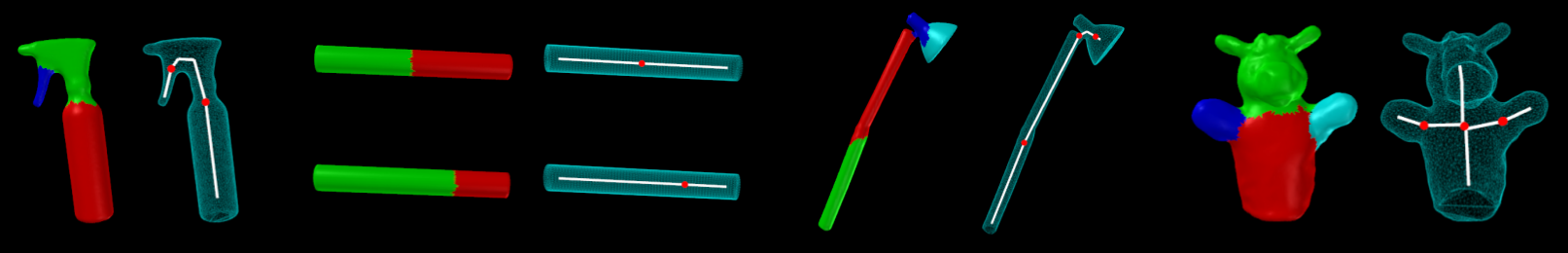

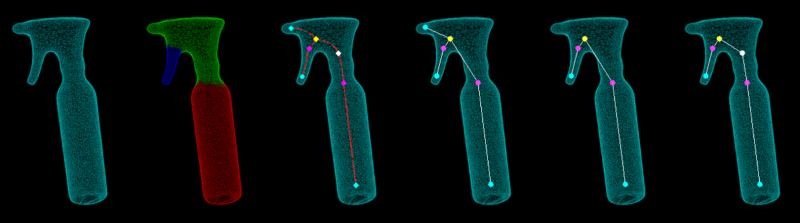

Although commercial and open-source software exists to reconstruct a static object from a sequence recorded with an RGB-D sensor, there is a lack of tools that build rigged models of articulated objects that deform realistically and can be used for tracking or animation.

In this work, we fill this gap and propose a method that creates a fully rigged model of an articulated object from depth data of a single sensor.

To this end, we combine deformable mesh tracking, motion segmentation based on spectral clustering, and skeletonization based on mean curvature flow.
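
As a rough illustration of the motion-segmentation step, the sketch below groups tracked vertices by spectral clustering on a trajectory-similarity graph. It is a minimal two-way partition under stated assumptions, not the paper's implementation: the affinity (a Gaussian on how much each pairwise vertex distance varies over time), the bandwidth `sigma`, the small connectivity floor, and the function name `segment_two_parts` are all hypothetical choices made for this example.

```python
import numpy as np

def segment_two_parts(tracks, sigma=0.1):
    """Split tracked vertices into two rigid parts (illustrative only).

    tracks: array of shape (T, N, 3), N vertex positions over T frames.
    Returns an array of N labels in {0, 1}; which part gets which label
    is arbitrary.
    """
    tracks = np.asarray(tracks, dtype=float)
    # Pairwise vertex distances in every frame: shape (T, N, N).
    diff = tracks[:, :, None, :] - tracks[:, None, :, :]
    dist = np.linalg.norm(diff, axis=-1)
    # Two vertices on the same rigid part keep a constant distance, so the
    # range of that distance over time acts as a dissimilarity.
    variation = dist.max(axis=0) - dist.min(axis=0)
    # Assumed Gaussian affinity; the small floor keeps the graph connected
    # so that the second eigenvector is well defined.
    affinity = np.exp(-(variation ** 2) / (2.0 * sigma ** 2)) + 1e-6
    # Symmetric normalized Laplacian: I - D^{-1/2} W D^{-1/2}.
    inv_sqrt_deg = 1.0 / np.sqrt(affinity.sum(axis=1))
    normalized = inv_sqrt_deg[:, None] * affinity * inv_sqrt_deg[None, :]
    laplacian = np.eye(len(affinity)) - normalized
    # eigh returns eigenvalues in ascending order; the eigenvector of the
    # second-smallest one (the Fiedler vector) encodes the two-way cut.
    _, vecs = np.linalg.eigh(laplacian)
    fiedler = vecs[:, 1] * inv_sqrt_deg
    return (fiedler > 0).astype(int)
```

For an object with more than two parts, one would typically keep several eigenvectors and cluster their rows (for example with k-means) instead of thresholding a single vector.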

The fully rigged model then consists of a watertight mesh, embedded skeleton, and skinning weights.
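
To make the three components concrete, the sketch below stores them in a small container and poses the mesh with linear blend skinning, a common way to apply skinning weights. This page does not state which skinning scheme or data layout the released models use, so the class `RiggedModel`, its field names, and the choice of linear blend skinning are assumptions for illustration only.

```python
from dataclasses import dataclass
import numpy as np

@dataclass
class RiggedModel:
    """Hypothetical container for the three parts of a rigged model."""
    vertices: np.ndarray  # (N, 3) rest-pose vertex positions
    faces: np.ndarray     # (F, 3) triangle vertex indices
    parents: np.ndarray   # (B,) parent bone index, -1 for the root
    weights: np.ndarray   # (N, B) skinning weights, each row sums to 1

def linear_blend_skinning(model, bone_transforms):
    """Pose the mesh given one 4x4 rest-to-posed transform per bone.

    bone_transforms: array of shape (B, 4, 4).
    Returns the posed vertices, shape (N, 3).
    """
    n = len(model.vertices)
    homogeneous = np.hstack([model.vertices, np.ones((n, 1))])  # (N, 4)
    # Every vertex moved by every bone's transform: shape (B, N, 4).
    per_bone = np.einsum("bij,nj->bni", bone_transforms, homogeneous)
    # Blend those candidates with the per-vertex weights: shape (N, 4).
    blended = np.einsum("nb,bni->ni", model.weights, per_bone)
    return blended[:, :3]
```

A vertex weighted fully to one bone follows that bone rigidly, while a vertex near a joint with mixed weights lands between the positions its bones would give it, which is what lets the surface bend smoothly.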

Publications

Tzionas, D., and Gall, J.

Reconstructing Articulated Rigged Models from RGB-D Videos

European Conference on Computer Vision Workshops 2016 (ECCVW'16)

Workshop on Recovering 6D Object Pose (R6D'16)

[pdf] [suppl] [BibTex] [video] [poster/ppt]

Videos

YouTube

Datasets

| Item | Downloads |
|---|---|
| Spray | [Frames] [Preview] [INput Model] [Deform. MoCap] [OUTput Artic. Model] [Videos_In/Out] |
| Donkey | [Frames] [Preview] [INput Model] [Deform. MoCap] [OUTput Artic. Model] [Videos_In/Out] |
| Lamp | [Frames] [Preview] [INput Model] [Deform. MoCap] [OUTput Artic. Model] [Videos_In/Out] |
| Pipe 1/2 | [Frames] [Preview] [INput Model] [Deform. MoCap] [OUTput Artic. Model] [Videos_In/Out] |
| Pipe 3/4 | [Frames] [Preview] [INput Model] [Deform. MoCap] [OUTput Artic. Model] [Videos_In/Out] |
| IJCV Pipe | [Frames] [Preview] [INput Model] [Deform. MoCap] [OUTput Artic. Model] [Videos_In/Out] |
| IJCV Rope | [Frames] [Preview] [INput Model] [Deform. MoCap] [OUTput Artic. Model] [Videos_In/Out] |
| Camera Parameters | [Camera] |
| Meshes (only .OFF) | [Spray] [Donkey] [Lamp] [Pipe12] [Pipe34] [IJCV-Pipe] [IJCV-Rope] |
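
Because the meshes are provided only in OFF format, a small reader may be handy. The sketch below parses the plain ASCII OFF layout (an `OFF` header, a counts line, then vertices and faces). It assumes the files carry no per-vertex or per-face colors or normals, which has not been checked against the actual downloads, and `read_off` is a hypothetical helper name.

```python
def read_off(text):
    """Parse ASCII OFF content into (vertices, faces).

    vertices: list of (x, y, z) float tuples.
    faces: list of tuples of vertex indices.
    """
    # Drop '#' comments and blank lines, then flatten into tokens.
    tokens = []
    for line in text.splitlines():
        tokens.extend(line.split("#", 1)[0].split())
    if not tokens or tokens[0] != "OFF":
        raise ValueError("not an OFF file: missing 'OFF' header")
    # Counts line: vertices, faces, edges (the edge count is not needed).
    n_vertices, n_faces = int(tokens[1]), int(tokens[2])
    pos = 4
    vertices = []
    for _ in range(n_vertices):
        x, y, z = (float(t) for t in tokens[pos:pos + 3])
        vertices.append((x, y, z))
        pos += 3
    faces = []
    for _ in range(n_faces):
        size = int(tokens[pos])  # number of vertices in this face
        faces.append(tuple(int(t) for t in tokens[pos + 1:pos + 1 + size]))
        pos += 1 + size
    return vertices, faces
```

For a downloaded mesh, a call such as `read_off(open(path).read())` should be enough, where `path` is a placeholder for wherever the file was saved.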

Related Projects

Capturing Hands in Action using Discriminative Salient Points and Physics Simulation, IJCV 2016 [Web]

Citation

If you find the material on this website useful for your academic work, please cite this work.

Contact

If you have general questions or comments concerning the paper or the website, please contact Dimitrios Tzionas.